Cognition Envelopes for Bounded AI Reasoning in Autonomous sUAS Operations

2025 Submitted for Peer Review

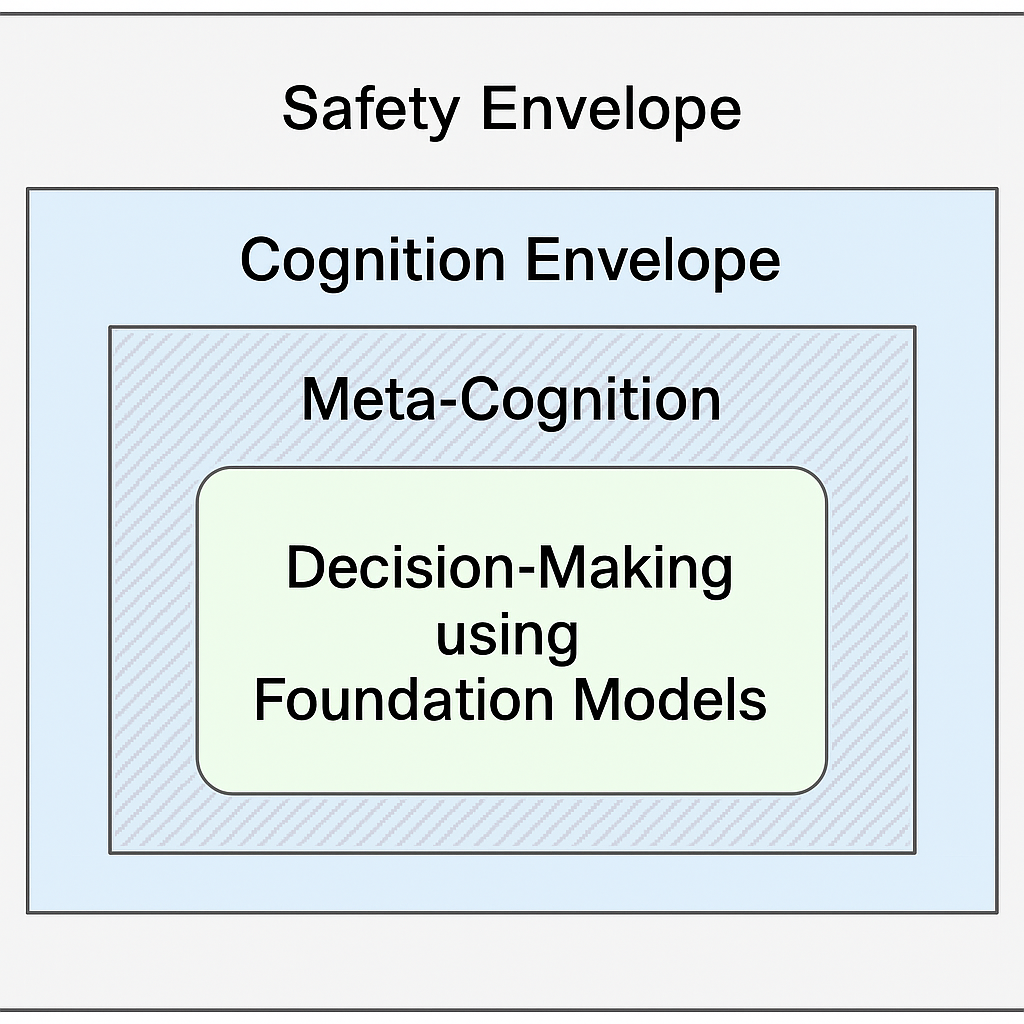

Cyber-physical systems increasingly rely on Foundation Models such as Large Language Models (LLMs) and Vision-Language Models (VLMs) to increase autonomy through enhanced perception, inference, and planning. However, these models also introduce new types of errors, such as hallucinations, overgeneralizations, and context misalignments, that can lead to incorrect decisions. To address this, we introduce the concept of Cognition Envelopes, designed to establish reasoning boundaries that constrain AI-generated decisions while complementing the use of meta-cognition and traditional safety envelopes. As with safety envelopes, Cognition Envelopes require practical guidelines and systematic processes for their definition, validation, and assurance.

@article{2025_Cognition_Envelopes,
author = {Alarc{\'o}n Granadeno, Pedro Antonio
and Russell Bernal, Arturo Miguel
and Nelson, Sofia and Hernandez, Demetrius
and Petterson, Maureen and Murphy, Michael
and Scheirer, Walter J and Cleland-Huang, Jane},
title = {Cognition Envelopes for Bounded AI Reasoning in Autonomous {sUAS} Operations},
journal = {arXiv e-prints},
pages = {arXiv--2510},
year = {2025}
}

SkyTouch: Augmenting Situational Awareness for Multi-sUAS Pilots through Multi-Modal Haptics

2025 Adjunct Proceedings of the 38th Annual ACM Symposium on User Interface Software and Technology (UIST Adjunct '25)

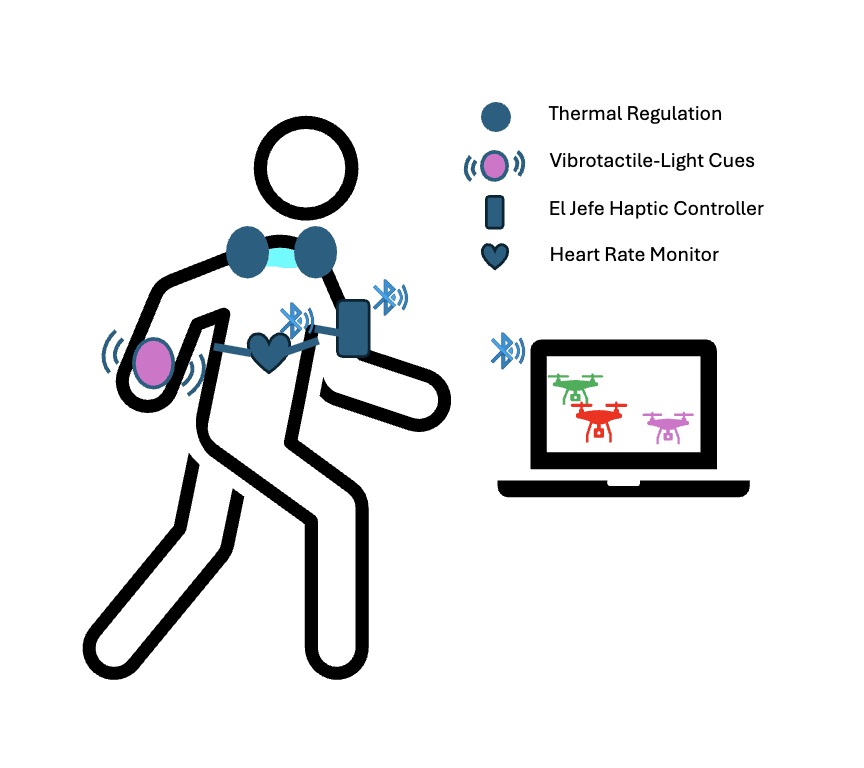

Pilots of small Unmanned Aircraft Systems (sUAS; e.g., drones) are often overwhelmed by data-heavy interfaces in critical missions such as Search and Rescue (SAR). This results in high cognitive load and an increased risk of error, especially in multi-sUAS scenarios. We introduce SkyTouch, a wearable haptic system that enhances the human-sUAS interface by translating complex data streams into an intuitive, peripheral language of sensation, establishing a novel "felt" connection between pilot and sUAS. Our system delivers thermal feedback on the neck to help regulate the operator's emotional state. It also delivers wrist-based vibrotactile and light cues that guide visual attention toward critical alerts without adding cognitive overhead. Through this multi-modal dialogue of feeling, SkyTouch moves beyond basic alerts to support deeper situational awareness and foster more resilient human-machine collaboration.

@inproceedings{2025_SkyTouch,
author = {Olesk, Johanna and Russell Bernal, Arturo Miguel},
title = {SkyTouch: Augmenting Situational Awareness for {Multi-sUAS} Pilots through Multi-Modal Haptics},
year = {2025},
isbn = {9798400720369},
publisher = {Association for Computing Machinery},
address = {New York, NY, USA},
url = {https://doi.org/10.1145/3746058.3758973},
doi = {10.1145/3746058.3758973},
booktitle = {Adjunct Proceedings of the 38th Annual ACM Symposium on User Interface Software and Technology},
articleno = {69},
numpages = {3},
series = {UIST Adjunct '25}
}

Validating Terrain Models in Digital Twins for Trustworthy sUAS Operations

2025 International Conference on Engineering Digital Twins (EDTconf)

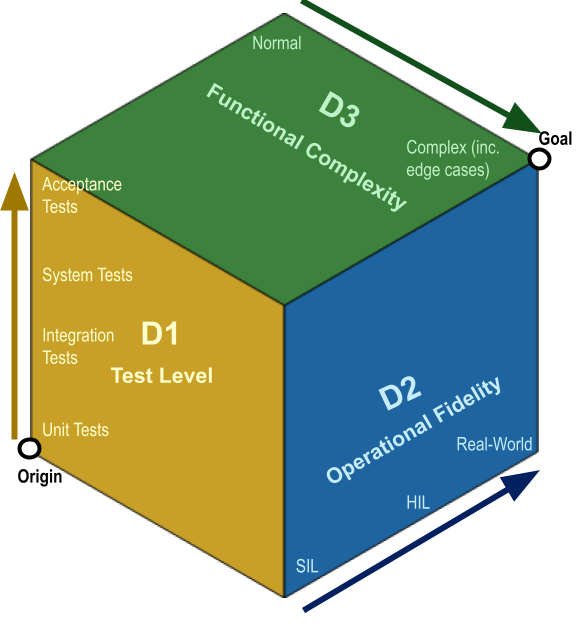

With the increasing deployment of small Unmanned Aircraft Systems (sUAS) in unfamiliar and complex environments, Environmental Digital Twins (EDTs) that comprise weather, airspace, and terrain data are critical for safe flight planning and for maintaining appropriate altitudes during search and surveillance operations. As sUAS capabilities expand through edge and cloud computing, accurate EDTs are also vital for advanced capabilities such as geolocation. However, real-world sUAS deployment introduces significant sources of uncertainty, necessitating a robust validation process for EDT components. This paper focuses on the validation of terrain models, one of the key components of an EDT, for real-world sUAS tasks. These models are constructed by fusing U.S. Geological Survey (USGS) datasets and satellite imagery, incorporating high-resolution environmental data to support mission tasks. Validating both the terrain models and their operational use by sUAS under real-world conditions presents significant challenges. These include limited data granularity, terrain discontinuities, GPS and sensor inaccuracies, visual detection uncertainties, and onboard resource and timing constraints. We propose a validation process grounded in software engineering principles that follows a workflow along three dimensions: test granularity, the progression from simulation to the real world, and the analysis of simple through edge conditions. We demonstrate our approach using a multi-sUAS platform equipped with a Terrain-Aware Digital Shadow.

@inproceedings{2025_Validating_Terrain_Models,
author = {Russell Bernal, Arturo Miguel
and Petterson, Maureen
and Alarcon Granadeno, Pedro Antonio
and Murphy, Michael and Mason, James
and Cleland-Huang, Jane},
title = {Validating Terrain Models in Digital Twins for Trustworthy {sUAS} Operations},
month = {October},
year = {2025},
booktitle = {2nd International Conference on Engineering Digital Twins {(EDTconf 2025)}}
}

Psych-Occlusion: Using Visual Psychophysics for Aerial Detection of Occluded Persons During Search and Rescue

2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)

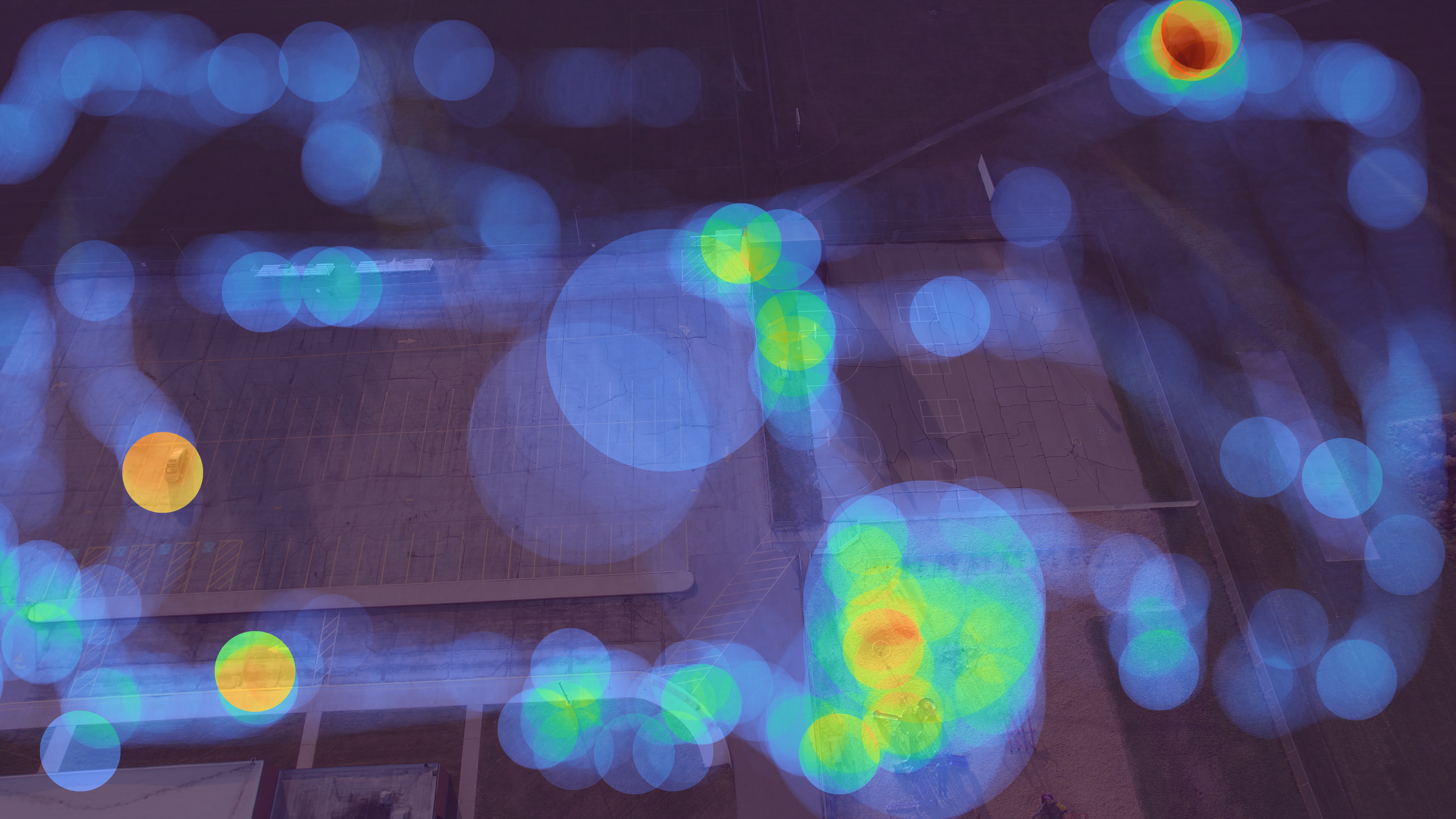

The success of Emergency Response (ER) scenarios, such as search and rescue, often depends on promptly locating a lost or injured person. With the increasing use of small Unmanned Aerial Systems (sUAS) as “eyes in the sky” during ER scenarios, efficient detection of persons from aerial views plays a crucial role in achieving a successful mission outcome. Fatigue of human operators during prolonged ER missions, coupled with limited human resources, highlights the need for sUAS equipped with Computer Vision (CV) capabilities to aid in finding the person from aerial views. However, the performance of CV models onboard sUAS degrades substantially under the rigorous real-world conditions of a typical ER scenario, where person search is hampered by occlusion and low target resolution. To address these challenges, we extracted images from the NOMAD dataset and conducted a crowdsourced experiment to collect behavioral measurements when humans were asked to “find the person in the picture”. We exemplify the use of our behavioral dataset, Psych-ER, by using its human accuracy data to adapt the loss function of a detection model. We tested our loss adaptation on a RetinaNet model evaluated on NOMAD against increasing distance and occlusion. The psychophysical loss adaptation improved on the baseline at greater distances across different levels of occlusion, without degrading performance at closer distances. To the best of our knowledge, our work is the first human-guided approach to the localization task of a detection model that also addresses the real-world challenges of aerial search and rescue.

@InProceedings{2025_Psych_ER,
author = {Russell Bernal, Arturo Miguel
and Cleland-Huang, Jane
and Scheirer, Walter},
title = {Psych-Occlusion: Using Visual Psychophysics for Aerial Detection of Occluded Persons during Search and Rescue},
booktitle = {Proceedings of the Winter Conference on Applications of Computer Vision {(WACV)}},
month = {February},
year = {2025},
pages = {3383--3395}
}

NOMAD: A Natural, Occluded, Multi-Scale Aerial Dataset, for Emergency Response Scenarios

2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)

With the increasing reliance on small Unmanned Aerial Systems (sUAS) for Emergency Response Scenarios, such as Search and Rescue, the integration of computer vision capabilities has become a key factor in mission success. Nevertheless, computer vision performance for detecting humans degrades severely when shifting from ground to aerial views. Several aerial datasets have been created to mitigate this problem. However, none of them has specifically addressed occlusion, a critical factor in Emergency Response Scenarios. The Natural, Occluded, Multi-scale Aerial Dataset (NOMAD) presents a benchmark for human detection under occluded aerial views, with five different aerial distances and rich imagery variance. NOMAD comprises 100 different actors, all performing sequences of walking, lying, and hiding. It includes 42,825 frames extracted from 5.4K-resolution videos. Each frame is manually annotated with a bounding box and a label indicating one of 10 visibility levels, categorized according to the percentage of the human body visible inside the bounding box. This allows computer vision models to be evaluated on their detection performance across different ranges of occlusion. NOMAD is designed to improve the effectiveness of aerial search and rescue and to enhance collaboration between sUAS and humans by providing a new benchmark dataset for human detection under occluded aerial views.

@InProceedings{2024_NOMAD,
author = {Russell Bernal, Arturo Miguel
and Scheirer, Walter
and Cleland-Huang, Jane},
title = {{NOMAD}: A Natural, Occluded, Multi-Scale Aerial Dataset, for Emergency Response Scenarios},
booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision {(WACV)}},
month = {January},
year = {2024},
pages = {8584--8595}
}

An Environmentally Complex Requirement for Safe Separation Distance Between UAVs

2024 IEEE 32nd International Requirements Engineering Conference Workshops (REW)

Cyber-Physical Systems (CPS) interact closely with their surroundings. They are directly impacted by their physical and operational environment, adjacent systems, user interactions, regulatory codes, and the underlying development process. Both the requirements and design are highly dependent upon assumptions made about the surrounding world, and therefore environmental assumptions must be carefully documented, and their correctness validated as part of the iterative requirements and design process. Prior work exploring environmental assumptions has focused on projects adopting formal methods or building safety assurance cases. However, we emphasize the important role of environmental assumptions in a less formal software development process, characterized by natural language requirements, iterative design, and robust testing, where formal methods are either absent or used for only parts of the specification. In this paper, we present a preliminary case study for dynamically computing the safe minimum separation distance between two small Uncrewed Aerial Systems based on drone characteristics and environmental conditions. In contrast to prior community case studies, such as the mine pump problem, patient monitoring system, and train control system, we provide several concrete examples of environmental assumptions, and then show how they are iteratively validated at various stages of the requirements and design process, using a combination of simulations, field-collected data, and runtime monitoring.

© 2025 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. For the version of record, see IEEE Xplore.

@INPROCEEDINGS{2024_envire,
author = {Alarcon Granadeno, Pedro Antonio
and Russell Bernal, Arturo Miguel
and Al Islam, Md Nafee
and Cleland-Huang, Jane},
booktitle = {2024 IEEE 32nd International Requirements Engineering Conference Workshops (REW)},
title = {An Environmentally Complex Requirement for Safe Separation Distance Between UAVs},
year = {2024},
pages = {166--175},
doi = {10.1109/REW61692.2024.00028}
}

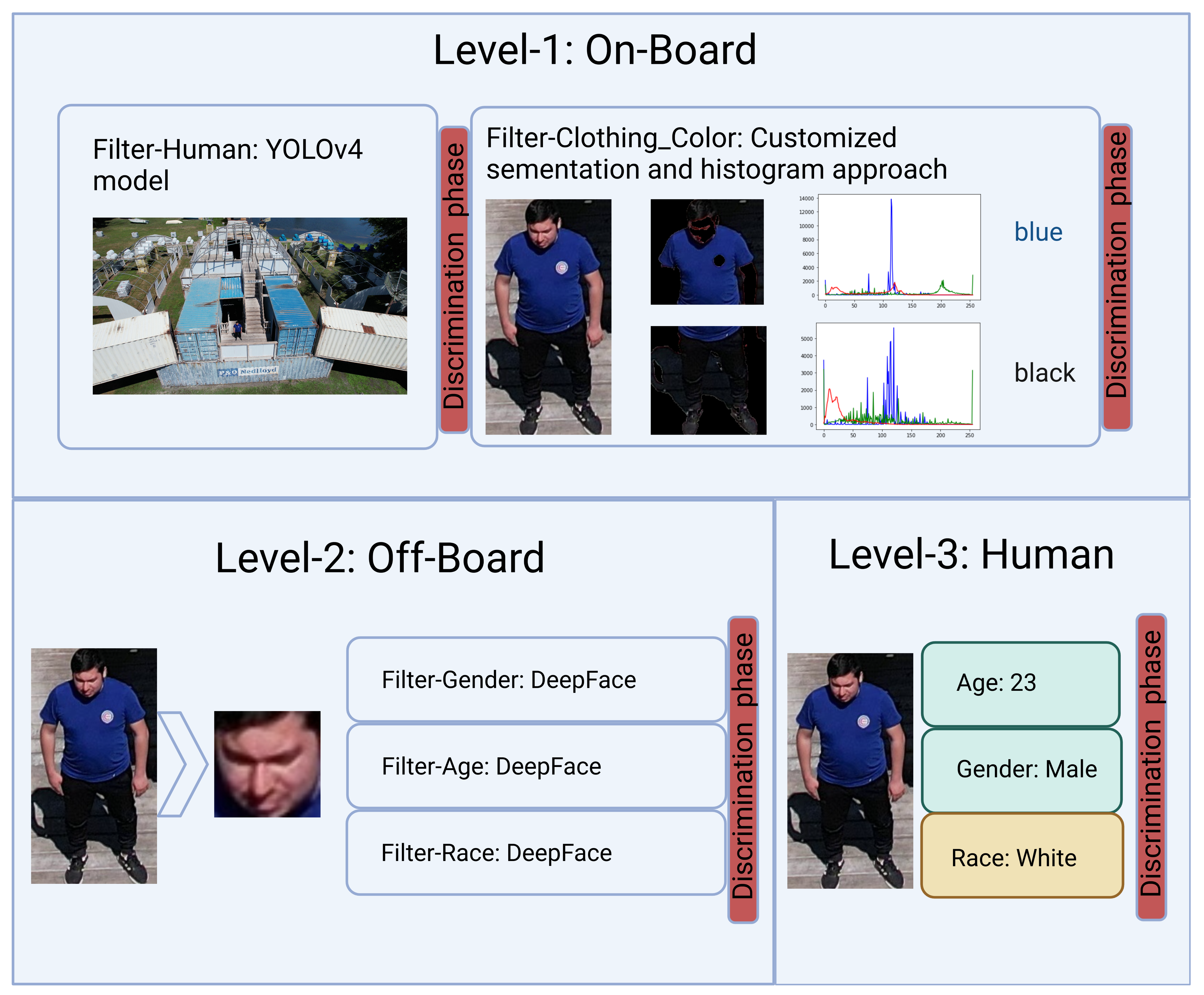

Hierarchically Organized Computer Vision in Support of Multi-Faceted Search for Missing Persons

2023 IEEE International Conference on Automatic Face and Gesture Recognition (FG)

Missing person searches are typically initiated with a description of a person that includes their age, race, clothing, and gender, possibly supported by a photo. Small Unmanned Aerial Systems (sUAS) imbued with Computer Vision (CV) capabilities can be deployed to quickly search an area for the missing person. However, the search task is far more difficult when a crowd of people is present and only the person described in the missing person report must be identified. Performing this task on the potentially limited computing resources of an sUAS is particularly challenging. We therefore propose AirSight, a new model that hierarchically combines multiple CV models, exploits both onboard and off-board computing capabilities, and engages humans interactively in the search. For illustrative purposes, we use AirSight to show how a person's image, extracted from an aerial video, can be matched to a basic description of the person. Finally, as this is a work-in-progress paper, we describe ongoing efforts to build an aerial dataset of partially occluded people and to physically deploy AirSight on our sUAS.

@INPROCEEDINGS{2023_Vision_Pipeline,
author = {Russell Bernal, Arturo Miguel
and Cleland-Huang, Jane},
booktitle = {2023 IEEE 17th International Conference on Automatic Face and Gesture Recognition {(FG)}},
title = {Hierarchically Organized Computer Vision in Support of Multi-Faceted Search for Missing Persons},
year = {2023},
pages = {1--7},
doi = {10.1109/FG57933.2023.10042698}
}